Steve Hoberman Core Collection (Data Modeling Master Class Training Manual, Data Modeling Made Simple, Data Model Scorecard, The Rosedata Stone, and Blockchainopoly) on PebbleU and in PDF Instant Downloads

The Steve Hoberman Core Collection

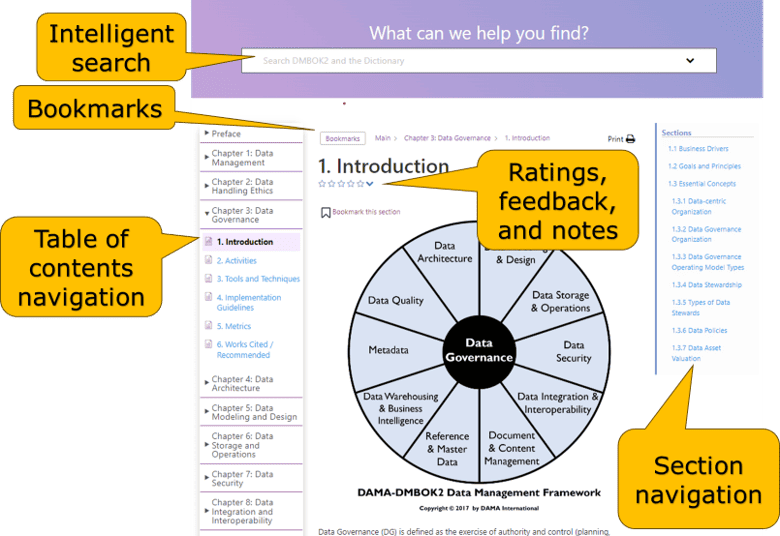

About PebbleU

PebbleU is our interactive platform where you can take courses and read our books on any device, as well as annotate, add bookmarks, and perform intelligent searches. A collection is a group of one or more books and courses that you can subscribe to annually on PebbleU. If a collection contains books, you will also receive PDF Instant Downloads of those books. New features weekly!


The Master Class is a complete data modeling course containing three modules. After completing Module 1, you will be able to explain the benefits of data modeling, apply the five settings to build a data model masterpiece, and know how and when to use each data modeling component (entities, attributes, representatives, relationships, subtyping, keys, hierarchies, and networks). After completing Module 2, you will be able to create conceptual, logical, and physical relational and dimensional data models. You will also learn how NoSQL data models differ from traditional data models in structure and modeling approach. After completing Module 3, you will be able to apply data model best practices through the ten categories of the Data Model Scorecard®. You will know not just how to build a data model, but how to build a data model well. Through the Scorecard, you will be able to incorporate supportability and extensibility features into your data model, as well as assess the quality of any data model.

Case studies and many exercises reinforce the material and will enable you to apply these techniques in your current projects. This course assumes no prior data modeling knowledge, so there are no prerequisites.

You can learn more about the class and the upcoming schedule here.

Data Modeling Made Simple is written in a conversational style that encourages you to read it from start to finish and master these ten objectives:

1. Know when a data model is needed and which type of data model is most effective for each situation

2. Read a data model of any size and complexity with the same confidence as reading a book

3. Build a fully normalized relational data model, as well as an easily navigable dimensional model

4. Apply techniques to turn a logical data model into an efficient physical design

5. Leverage several templates to make requirements gathering more efficient and accurate

6. Explain all ten categories of the Data Model Scorecard

7. Learn strategies to improve your working relationships with others

8. Appreciate the impact unstructured data has, and will have, on our data modeling deliverables

9. Learn basic UML concepts

10. Put data modeling in context with XML, metadata, and agile development

The Data Model Scorecard is a data model quality scoring tool containing ten categories aimed at improving the quality of your organization’s data models. Many of my consulting assignments are dedicated to applying the Data Model Scorecard to my clients’ data models, and I will show you how to apply the Scorecard in this book.

This book, written for people who build, use, or review data models, contains the Data Model Scorecard template and an explanation, with many examples, of each of the ten Scorecard categories. There are three sections:

In Section I, Data Modeling and the Need for Validation, you will receive a short data modeling primer (Chapter 1), understand why it is important to get the data model right (Chapter 2), and learn about the Data Model Scorecard (Chapter 3).

In Section II, Data Model Scorecard Categories, we will explain each of the ten categories of the Data Model Scorecard. There are ten chapters in this section, each chapter dedicated to a specific Scorecard category:

- Chapter 4: Correctness

- Chapter 5: Completeness

- Chapter 6: Scheme

- Chapter 7: Structure

- Chapter 8: Abstraction

- Chapter 9: Standards

- Chapter 10: Readability

- Chapter 11: Definitions

- Chapter 12: Consistency

- Chapter 13: Data

In Section III, Validating Data Models, we will prepare for the model review (Chapter 14), cover tips to help during the model review (Chapter 15), and then review a data model based upon an actual project (Chapter 16).

Similar to how the Rosetta Stone provided a communication tool across multiple languages, the Rosedata Stone provides a communication tool across business languages. The Rosedata Stone, called the Business Terms Model (BTM) or the Conceptual Data Model, displays the achievement of a Common Business Language of terms for a particular business initiative.

With more and more data being created and used, combined with intense competition, strict regulations, and rapidly spreading social media, the financial, liability, and credibility stakes have never been higher, and therefore the need for a Common Business Language has never been greater. Appreciate the power of the BTM and apply the steps to build a BTM over the book’s five chapters:

- Challenges. Explore how a Common Business Language is more important than ever with technologies like the Cloud and NoSQL, and regulations such as the GDPR.

- Needs. Identify scope and plan precise, minimal visuals that will capture the Common Business Language.

- Solution. Meet the BTM and its components, along with the variations of relational and dimensional BTMs. Experience how several data modeling tools display the BTM, including CaseTalk, ER/Studio, erwin DM, and Hackolade.

- Construction. Build operational (relational) and analytics (dimensional) BTMs for a bakery chain.

- Practice. Reinforce BTM concepts and build BTMs for two of your own initiatives alongside a real example.

Blockchainopoly contains three parts: Explanation, Usage, and Impact.

- Explanation. Part I will explain the concepts underlying blockchain. A precise and concise definition is provided, distinguishing blockchain from blockchain architecture. Variations of blockchain are explored based upon the concepts of purpose and scope.

- Usage. Now that you understand blockchain, where do you use it? The reason for building a blockchain application must include at least one of these five drivers: transparency, streamlining, privacy, permanence, or distribution. Usages based upon these five drivers are shown for finance, insurance, government, manufacturing and retail, utilities, healthcare, nonprofit, and media. Process diagrams will illustrate each usage through inputs, guides, enablers, and outputs. Also examined are the risks of applying these usages, such as cooperation, incentives, and change.

- Impact. Now that you know where to use blockchain, how will it impact our existing IT (Information Technology) environment? Part III explores how blockchain will impact data management. The Data Management Body of Knowledge 2nd Edition (DAMA-DMBOK2) is an amazing book that defines the data management field along with the often complex relationships that exist between the various data management disciplines. Learn how blockchain will impact each of these 11 disciplines: Data Governance, Data Architecture, Data Modeling and Design, Data Storage and Operations, Data Security, Data Integration and Interoperability, Document and Content Management, Reference and Master Data, Data Warehousing and Business Intelligence, Metadata Management, and Data Quality Management.

Once you understand blockchain concepts and principles, you can position yourself, your department, and your organization to leverage distributed ledger technology.

About Steve

Steve Hoberman has been a data modeler for over 30 years, and thousands of business and data professionals have completed his Data Modeling Master Class. He is the author of nine books on data modeling, including The Rosedata Stone and Data Modeling Made Simple, as well as Blockchainopoly. One of Steve’s frequent data modeling consulting assignments is to review data models using his Data Model Scorecard® technique. He is the founder of the Design Challenges group, creator of the Data Modeling Institute’s Data Modeling Certification exam, Conference Chair of the Data Modeling Zone conferences, director of Technics Publications, lecturer at Columbia University, and recipient of the Data Administration Management Association (DAMA) International Professional Achievement Award.

Faculty may request complimentary digital desk copies
