Data Modeling Zone (DMZ) returns to Phoenix! March 4-6, 2025.

Applications deliver value only when they meet user needs. Yet organizations spend millions of dollars and thousands of hours every year developing solutions that fail to deliver. Much of this waste comes from poorly captured and articulated business requirements. Data models prevent this waste by capturing business terminology and needs in a precise form and at varying levels of detail, ensuring fluid communication across business and IT. Data modeling is therefore an essential skill for anyone involved in building an application: from data scientists and business analysts to software developers and database administrators. Data modeling is all about understanding the data used within our operational and analytics processes, documenting this knowledge in a precise form called the “data model”, and then validating this knowledge through communication with both business and IT stakeholders.

DMZ is the only conference completely dedicated to data modeling. DMZ US 2025 will contain five tracks:

- Skills (fundamental and advanced modeling techniques)

- Technologies (AI, mesh, cloud, lakehouse, modeling tools, and more)

- Patterns (reusable modeling and architectural structures)

- Growth (communication and time management techniques)

- Semantics (graphs, ontologies, taxonomies, and more)

The DMZ US 2025 Program

Pre-conference Workshops

Skills

DataOps, GitOps, and Docker containers are changing the role of Data Modeling, now at the center of end-to-end metadata management.

Success in the world of self-service analytics, data meshes, microservices, and event-driven architectures can be challenged by the need to keep data catalogs and dictionaries interoperable with the constantly evolving schemas of databases and data exchanges.

In other words, the business side of human-readable metadata management must be up to date and in sync with the technical side of machine-readable schemas. This process can only work at scale if it is automated.

It is hard enough for IT departments to keep schemas in sync across the various technologies involved in data pipelines. For data to be useful, business users must have an up-to-date view of the structures, complete with context and meaning.

In this session, we will review the options available to create the foundations for a data management framework providing architectural lineage and curation of metadata management.

Assuming no prior knowledge of data modeling, we start off with an exercise that will illustrate why data models are essential to understanding business processes and business requirements. Next, we will explain data modeling concepts and terminology, and provide you with a set of questions you can ask to quickly and precisely identify entities (including both weak and strong entities), data elements (including keys), and relationships (including subtyping). We will discuss the three different levels of modeling (conceptual, logical, and physical), and for each explain both relational and dimensional mindsets.

Steve Hoberman’s first word was “data”. He has been a data modeler for over 30 years, and thousands of business and data professionals have completed his Data Modeling Master Class. Steve is the author of 11 books on data modeling, including The Align > Refine > Design Series and Data Modeling Made Simple. Steve is also the author of Blockchainopoly. One of Steve’s frequent data modeling consulting assignments is to review data models using his Data Model Scorecard® technique. He is the founder of the Design Challenges group, creator of the Data Modeling Institute’s Data Modeling Certification exam, Conference Chair of the Data Modeling Zone conferences, director of Technics Publications, lecturer at Columbia University, and recipient of the Data Administration Management Association (DAMA) International Professional Achievement Award.

I am a senior at Barrett, the Honors College, at Arizona State University, double majoring in Business Data Analytics and Economics, with a minor in Philosophy. I’m the current secretary of the Sailing Club at ASU and the President for the Pacific Coast Collegiate Sailing Conference. I volunteer with DAMA Phoenix as their VP of Outreach, and I also hold a part-time data analytics internship position with Vital Neuro!

The regular organizer of your corporation’s employee social events, such as picnics and other gatherings, wants relief from the burden of managing them manually. To streamline this process, we need to develop a new database to support an application called the Event Registration and Management System (ERAMS), which will replace the outdated methods of email and paper-based management.

Your session facilitator, Steve Sewell, will introduce the case study and also assume the role of the business partner responsible for organizing these outings. During this workshop, attendees will collaborate in groups to address various aspects of the logical data modeling required for ERAMS. The workshop’s primary objective is to enhance your data modeling skills through peer learning and guidance from the facilitator. Additionally, you will have opportunities to practice asking pertinent questions of your business partner to apply to the logical data model.

Given our limited time, we will adopt a “cooking show” approach, where some of the data requirements (ingredients) will be pre-prepared and measured. Your group’s task will be to assemble these components. At the end of the session, we will have a “tasting” or showcase of some of the completed work.

Various modeling tools will be provided to facilitate group collaboration, offering you the opportunity to observe and explore the use and capabilities of different data modeling tools. You are also welcome to bring your own data modeling tool.

Steve Sewell graduated from Illinois State University in Business Data Processing, where he gained expertise in various programming languages, requirements gathering, and normalized database design. With a career spanning over three decades in the insurance industry, Steve has excelled in many roles, including his most recent as a Senior Data Designer at State Farm. His current work involves providing strategic guidance for enterprise-wide initiatives involving large-scale Postgres and AWS implementations, while adhering to best practices in database design. Steve is actively involved in teaching data modeling best practices to new Data Designers.

Communication

Change is no longer the status quo; disruption is. Over the past five years, major disruptions have happened in all our lives, leaving some of us reeling while others stand tall, egging on more. People approach disruption differently. Some seem to adjust and quickly look for ways to optimize or create efficiencies for the upcoming change. Others dig their heels in, question everything, and insist on all the answers, in detail, right away. Then there are those who are ready and willing to take on disruption. In this workshop you will find out which profile best suits you, how that applies to big organizational efforts like data governance, AI, and the impact on data management and data modeling, and finally how you can bridge the divide between these profiles to harness the disruption and calm the chaos.

- Disruption Research

- What is the sustainable disruption model?

- Are you a Disrupter, Optimizer or Keeper: Take the Quiz

- Working with others

- Three take-aways

Laura Madsen is a global data strategist, keynote speaker, and author. She advises data leaders in healthcare, government, manufacturing, and tech. Laura has spoken at hundreds of conferences and events, inspiring organizations and individuals alike with her iconoclastic disrupter mentality. Laura is a co-founder and partner at Moxy Analytics, a Minneapolis-based consulting firm that brings together two of her biggest passions: helping companies define and execute successful data strategies and radically challenging the status quo.

Storytelling is a time-honored human strategy for communicating effectively, building community, and creating networks of trust. In the first half of this 3-hour workshop you will learn accessible and memorable tools for crafting and telling stories about yourself, your experiences, and values. In the second half, you will apply what you learn to your professional contexts, including how to use stories and storytelling to:

- Explain the value of data modeling

- Validate a data model

- Interpret analytics

Liz Warren, a fourth-generation Arizonan, is the faculty director and one of the founders of the South Mountain Community College Storytelling Institute in Phoenix, Arizona. Her textbook, The Oral Tradition Today: An Introduction to the Art of Storytelling is used at colleges around the nation. Her recorded version of The Story of the Grail received a Parents’ Choice Recommended Award and a Storytelling World Award. The Arizona Humanities Council awarded her the Dan Schilling Award as the 2018 Humanities Public Scholar. In 2019, the American Association of Community Colleges awarded her the Dale Parnell Distinguished Faculty Award. Recent work includes storytelling curricula for the University of Phoenix and the Nature Conservancy, online webinars for college faculty and staff around the country, events for the Heard Museum, the Phoenix Art Museum, the Desert Botanical Garden, and the Children’s Museum of Phoenix, and in-person workshops for Senator Mark Kelly’s staff and Governor Ducey’s cabinet.

Dr. Travis May has been a part of South Mountain Community College for 23 years and is currently the Interim Dean of Academic Innovation. His role entails providing leadership for the South Mountain Community College (SMCC) Construction Trades Institute, advancing faculty’s work with Fields of Interest, and strengthening our localized workforce initiatives. Dr. May holds a Bachelor of Arts in Anthropology from Arizona State University, and a Master of Education in Educational Leadership and a Doctor of Education in Organizational Leadership and Development from Grand Canyon University. Dr. May has immersed himself as a Storytelling faculty member for the past nine years and is highly regarded as an instructor. He approaches his classes with student success in mind, knowing that he is also altering the course of his students’ lives. His classes are fun, engaging, and challenging, and he encourages students to embrace their inner voice so they can share their stories with the world.

Certification

Unlock the potential of your data management career with the Certified Data Management Professional (CDMP) program by DAMA International. As the global leader in Data Management, DAMA empowers professionals like you to acquire the skills, knowledge, and recognition necessary to thrive in today’s data-driven world. Whether you’re a seasoned data professional or an aspiring Data Management expert, the CDMP certification sets you apart, validating your expertise and opening doors to new career opportunities.

CDMP is recognized worldwide as the gold standard for Data Management professionals. Employers around the globe trust and seek out CDMP-certified individuals, making it an essential credential for career advancement.

All CDMP certification levels require passing the Data Management Fundamentals exam. This workshop shows you what to expect when taking the exam and helps you define your best strategy for answering it. It is not intended to teach you Data Management, but to introduce you to CDMP and briefly review the most relevant topics to keep in mind. After our break for lunch, you will have the opportunity to take the exam in the PIYP (Pay If You Pass) modality!

Through the first part of this workshop (9:00-12:30), you will get:

- Understanding of how CDMP works, what type of questions to expect, and best practices when responding to the exam.

- A summary of the most relevant topics of Data Management according to the DMBoK 2nd Edition

- A series of recommendations for you to define your own strategy on how to face the exam to get the best score possible

- A chance to answer the practice exam to test your strategy

Topics covered:

- Introduction to CDMP

- Overview and summary of the most relevant points of DMBoK Knowledge Areas:

- Data Management

- Data Handling Ethics

- Data Governance

- Data Architecture

- Data Modeling

- Data Storage and Operations

- Data Integration

- Data Security

- Document and Content Management

- Master and Reference Data

- Data Warehousing and BI

- Metadata Management

- Data Quality

- Analysis of sample questions

We will break for lunch and come back full of energy to take the CDMP exam in the PIYP (Pay If You Pass) modality, which is a great opportunity.

Those registered for this workshop will get an Event CODE to purchase the CDMP exam at no charge before taking the exam. The Event CODE will be emailed along with instructions to enroll in the exam. Once you have enrolled, the Practice Exam becomes available, and we strongly recommend taking it as many times as possible before the exam.

Considerations:

- PIYP means that if you pass the exam (every exam is passed by answering at least 60% of the questions correctly), you must pay for it (US$300.00) before leaving the room, so be ready with your credit card. If you are expecting a score of 70 or above and you get 69, you must still pay for the exam.

- You must bring your own personal device (laptop or tablet, not mobile phone) with the Chrome browser installed.

- Work laptops are not recommended, as they might have firewalls that will not allow you to access the exam platform.

- If English is not your main language, you must enroll in the exam as ESL (English as a Second Language), and you may wish to install a translator as a Chrome extension.

- Data Governance and Data Quality specialty exams will also be available

If you are interested in taking this workshop, please complete this form to receive your Event CODE and to secure a spot to take the exam.

Semantics

Learn how to model an RDF (Resource Description Framework) graph, the underpinning of a true inference-capable knowledge graph.

We will cover the fundamentals of RDF Graph Data Models using the Financial Industry Business Ontology (FIBO), and see how to create a domain-specific graph model on top of the FIBO Ontology. Learn how to:

- Build an RDF graph

- Data model with RDF

- Validate data with SHACL (Shapes Constraint Language)

- Query RDF graphs with SPARQL

- Integrate LLMs with a graph model
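As a rough illustration of the triple structure that underlies an RDF graph, here is a minimal sketch in plain Python. It deliberately uses no RDF library, and every identifier in it (`ex:Acme`, `ex:hasSubsidiary`, and so on) is hypothetical rather than an actual FIBO term. The `match` helper only loosely mimics how a single SPARQL triple pattern selects facts.

```python
# A toy triple store: each fact is a (subject, predicate, object) tuple.
# All identifiers are hypothetical examples, not real FIBO terms.
triples = {
    ("ex:Acme", "rdf:type", "ex:Corporation"),
    ("ex:Acme", "ex:hasSubsidiary", "ex:AcmeLabs"),
    ("ex:AcmeLabs", "rdf:type", "ex:Corporation"),
}


def match(pattern, store):
    """Return the triples that fit a pattern, sorted for stable output.

    None acts as a wildcard, loosely like a variable in a SPARQL
    triple pattern.
    """
    return sorted(
        t for t in store
        if all(p is None or p == v for p, v in zip(pattern, t))
    )


# "Which subjects are typed as ex:Corporation?"
corporations = [s for s, _, _ in match((None, "rdf:type", "ex:Corporation"), triples)]
```

A real RDF store would add IRIs, literals with datatypes, and inference over an ontology; this sketch only shows the subject, predicate, object shape.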

Sumit Pal is an ex-Gartner VP Analyst in the Data Management & Analytics space. Sumit has more than 30 years of experience in the data and software industry, in roles spanning companies from startups to enterprise organizations, building, managing, and guiding teams and building scalable software systems across the stack, from middle tier and data layer to analytics and UI, using Big Data, NoSQL, DB Internals, Data Warehousing, Data Modeling, and Data Science. He is also the published author of a book on SQL engines and developed a MOOC course on Big Data.

With the unpredictable trajectory of AI over the near future, ranging from a mild AI winter to AI potentially getting out of our control, integrating AI into the enterprise should be spearheaded by highly curated BI systems led by the expertise of human BI analysts and subject matter experts. In this session, we will explore how these two facets of intelligence can synergize to transform businesses into dynamic, highly adaptive entities, in a way that is both cautious and enhances competitive capability.

Based on concepts from the book “Enterprise Intelligence,” attendees will gain an in-depth understanding of building a resilient enterprise by integrating BI structures into an Enterprise Knowledge Graph (EKG). The key topic is the integration of the roles of Knowledge Graphs, Data Catalogs, and BI-derived structures like the Insight Space Graph (ISG) and Tuple Correlation Web (TCW). The session will also emphasize the importance of data mesh methodology in enabling the seamless onboarding of more BI sources, ensuring robust data governance and metadata management.

Key Takeaways for Attendees:

- Drive Safe AI Integration: Discover how to use highly curated BI data to enhance the accuracy and depth of your analyses and understand the potential of combining BI with AI for more insightful and predictive analytics.

- Architectural Frameworks and Data Mesh: Learn strategies to integrate diverse data sources into a cohesive Enterprise Knowledge Graph using data mesh methodology, ensuring robust data governance and metadata management.

- Create a Resilient Enterprise: Gain insights into creating an intelligent enterprise capable of making innovative decisions by harnessing the synergy between BI and AI. Understand how to build and maintain a robust data infrastructure that drives organizational success.

Explore the transformative potential of Enterprise Intelligence and equip your organization with the tools and knowledge to navigate and excel in the complexities of the modern world.

Eugene Asahara, with a rich history of over 40 years in software development, including 25 years focused on business intelligence, particularly SQL Server Analysis Services (SSAS), is currently working as a Principal Solutions Architect at Kyvos Insights. His exploration of knowledge graphs began in 2005 when he developed Soft-Coded Logic (SCL), a .NET Prolog interpreter designed to modernize Prolog for a data-distributed world. Later in 2012, Eugene ventured into creating Map Rock, a project aimed at constructing knowledge graphs that merge human and machine intelligence across numerous SSAS cubes. While these initiatives didn’t gain extensive adoption at the time, the lessons learned have proven invaluable. With the emergence of Large Language Models (LLMs), building and maintaining knowledge graphs has become practically achievable, and Eugene is leveraging his past experience and insights from SCL and Map Rock to this end. He resides in Eagle, Idaho, with his wife, Laurie, a celebrated watercolorist known for her award-winning work in the state, and their two cats,

The Main Event

Skills

Restore a balance between a code-first approach, which results in poor data quality and unproductive rework, and too much data modeling, which gets in the way of getting things done.

The principles of Eric Evans’ popular book “Domain-Driven Design”, published in 2003 for software development, have been applied and adapted to data modeling, resulting in a pragmatic approach to designing data structures at the initial phase of metadata management.

In this session, you will learn how to strike a balance between too much data modeling, which may have been in the way of getting things done, and not enough data modeling, which often results in suboptimal applications and poor data quality, one of the causes of AI “hallucinations.”

You will also learn how to tackle complexity at the heart of data, and how to reconcile Business and IT through a shared understanding of the context and meaning of data.

Pascal Desmarets is the founder and CEO of Hackolade, a data modeling tool for NoSQL databases, storage formats, REST APIs, and JSON in RDBMS. Hackolade pioneered Polyglot Data Modeling, which is data modeling for polyglot data persistence and data exchanges. With Hackolade’s Metadata-as-Code strategy, data models are co-located with application code in Git repositories as they evolve and are published to business-facing data catalogs to ensure a shared understanding of the meaning and context of your data. Pascal is also an advocate of Domain-Driven Data Modeling.

When developing a data analytics platform, how do we bring together business requirements on the one hand with specific data structures to be created on the other? Model-Based Business Analysis (MBBA), a process model embedded in an agile context, can make a valuable contribution here. MBBA is based on specified use cases, runs through several phases, and generates many artifacts relevant to development activities, in particular conceptual data models that serve as templates for EDW implementations. The MBBA process concludes with concrete logical data structures for an access layer, usually star schema definitions. The participants will get an overview of all phases, objectives, and deliverables of the MBBA process.

- You will learn how conceptual data models help with requirements analysis for data analytics platforms.

- You will learn which steps and deliverables are necessary for such an analysis and what role data governance plays here.

- You will learn how all these steps and models can be combined in a holistic process to provide significant added value for development.

Peer M. Carlson is Principal Consultant at b.telligent (Germany) with extensive experience in the field of Business Intelligence & Data Analytics. He is particularly interested in data architecture, data modeling, Data Vault, business analysis, and agile methodologies. As a dedicated proponent of conceptual modeling and design, Peer places great emphasis on helping both business and technical individuals enhance their understanding of overall project requirements. He holds a degree in Computer Science and is certified as a “BI Expert” by TDWI Europe.

In today’s data-driven world, organizations face the challenge of managing vast amounts of data efficiently and effectively. Traditional data warehousing approaches often fall short in addressing issues related to scalability, flexibility, and the ever-changing nature of business requirements. This is where Data Vault, a data modeling methodology designed specifically for data warehousing, comes into play.

In this introductory session, we will explore the fundamentals of Data Vault, a contemporary approach that simplifies the process of capturing, storing, and integrating data from diverse sources.

Participants will gain a comprehensive understanding of the key concepts and components of Data Vault, including hubs, links, and satellites. We will discuss how it promotes scalability, auditability, and adaptability, making it an ideal choice for organizations looking to future-proof their data solutions.
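To make the three component types concrete, here is a simplified sketch in Python. It is only an illustration under stated assumptions: the class fields, the sample keys (`CUST-001`, `ORD-9001`), and the `current` helper are all hypothetical, and production Data Vault implementations typically live in database tables and often use hash keys rather than raw business keys.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Hub:
    """One row per unique business key (e.g. a customer number)."""
    business_key: str
    load_date: date
    record_source: str


@dataclass(frozen=True)
class Link:
    """A relationship between two or more hubs, identified by their keys."""
    hub_keys: tuple
    load_date: date
    record_source: str


@dataclass(frozen=True)
class Satellite:
    """Descriptive attributes of a hub; each change adds a new row."""
    parent_key: str
    load_date: date
    attributes: tuple  # (name, value) pairs


# Hypothetical sample data.
customer = Hub("CUST-001", date(2025, 1, 1), "crm")
order = Hub("ORD-9001", date(2025, 1, 5), "shop")
placed = Link((customer.business_key, order.business_key), date(2025, 1, 5), "shop")

# History is kept by inserting new satellite rows, never by updating old ones.
history = [
    Satellite("CUST-001", date(2025, 1, 1), (("city", "Phoenix"),)),
    Satellite("CUST-001", date(2025, 2, 1), (("city", "Tempe"),)),
]


def current(satellites, parent_key):
    """Return the most recently loaded satellite row for a hub key."""
    rows = [s for s in satellites if s.parent_key == parent_key]
    return max(rows, key=lambda s: s.load_date)


latest_city = dict(current(history, "CUST-001").attributes)["city"]
```

Because changes only ever add satellite rows, earlier states remain available, which is one way the auditability mentioned above can be supported.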

By the end of this session, beginners will have a solid foundation in Data Vault and be equipped with the knowledge to start their journey towards mastering this contemporary data warehousing technique.

Join us to discover how Data Vault can change your approach to data management and unlock the full potential of your organization’s data assets.

Dirk Lerner is an experienced independent consultant and managing director of TEDAMOH. He is considered a global expert on BI architectures, data modeling and temporal data. Dirk advocates flexible, lean, and easily extendable data warehouse architectures.

Through the TEDAMOH Academy, Dirk coaches and trains in the areas of temporal data, data modeling certification, data modeling in general, and on Data Vault in particular.

As a pioneer for Data Vault and FCO-IM in Germany, he has written various publications and is a highly acclaimed international speaker at conferences, author of the TEDAMOH blog, and co-author of the book ‘Data Engine Thinking’.

High quality, integrated data demands careful attention to applying the right degree of normalization (normal form) for your data modeling requirements. Ignoring the different normal forms can lead to duplicative data and processing, difficulties in data granularity causing integration and query problems, etc. However, the normal forms range from the nearly obvious to the esoteric so it is important to understand how to apply the normal forms. In this session, you will learn about the different normal forms and some example scenarios where the normal form is applicable.
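As one example scenario, the sketch below shows a transitive dependency being removed to reach third normal form. The table and column names are hypothetical and the data is invented for illustration: `customer_city` depends on `customer_id` rather than on the key `order_id`, so it is stored redundantly in the flat structure.

```python
# Flat structure: customer_city is repeated on every order of a customer.
# All names and values here are hypothetical.
orders_flat = [
    {"order_id": 1, "customer_id": "C1", "customer_city": "Phoenix", "total": 40},
    {"order_id": 2, "customer_id": "C1", "customer_city": "Phoenix", "total": 15},
    {"order_id": 3, "customer_id": "C2", "customer_city": "Tucson", "total": 22},
]

# Move the attribute that depends on customer_id into its own structure,
# keyed by customer_id, so each city is stored once per customer.
customers = {
    row["customer_id"]: {"customer_city": row["customer_city"]}
    for row in orders_flat
}

# Orders keep only attributes that depend on order_id, plus the reference
# to the customer.
orders = [
    {"order_id": row["order_id"], "customer_id": row["customer_id"], "total": row["total"]}
    for row in orders_flat
]
```

After the split, a customer's city changes in one place only, which removes the duplication and the update anomalies that come with it.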

Pete Stiglich is the founder of Data Principles, LLC, a consultancy focused on data architecture/modeling, data management, and analytics. Pete has over 25 years of experience in these topics as a consultant managing teams of architects and developers to deliver outstanding results and is an industry thought leader on Conceptual/Business Data Modeling. His motto is “Model the business before modeling the solution”.

Where does the modern Data Architect fit in today’s world? With Generative AI, automation, code generation, and remote work, how does the Data Architect not only survive but thrive in this field? We will take a short look back, then assess the current state of affairs, and show how leveraging solid fundamentals can prepare you and your organization for the future.

Doug “The Data Guy” Needham started his career as a Marine Database Administrator supporting operational systems that spanned the globe in support of the Marine Corps missions. Since then, Doug has worked as a consultant, data engineer, and data architect for enterprises of all sizes. He is currently working as a Data Scientist, tinkering with Graphs and Enrichment Platforms while showing others how to get more meaning from data. He always focuses on the Data Operations side of ensuring data moves throughout the Enterprise in the most efficient manner.

Maybe you never heard of CDMP, maybe you heard the name before, but you are not sure what it represents, you saw the CDMP Exams in the conference program and wonder what it is all about, or you are here to take the exam. This session is presented by DAMA International and will answer all your questions concerning CDMP:

- What is the Certified Data Management Professional program?

- Why is it relevant to you?

- How can you prepare to be certified?

- What are the enrollment options?

- What happens during an examination session?

Michel is an IT professional with more than 40 years of experience, mostly in business software development. He has been involved in multiple data modeling and interoperability activities. Over the last 7 years, he has worked on data quality initiatives as well as data governance implementation projects. He has a master’s degree in Software Engineering and has been CDMP certified (Master) since 2019. He currently consults on a large application development project as data management/modeling lead and teaches introduction to data governance, data quality, and data security at Université de Sherbrooke.

Michel is the current VP Professional Development for DAMA International.

Technologies

The Align > Refine > Design approach covers conceptual, logical, and physical data modeling (schema design and patterns), combining proven data modeling practices with database-specific features to produce better applications. Learn how to apply this approach when creating a DynamoDB schema. Align is about agreeing on the common business vocabulary so everyone is aligned on terminology and general initiative scope. Refine is about capturing the business requirements, that is, refining our knowledge of the initiative to focus on what is essential. Design is about the technical requirements, that is, designing to accommodate DynamoDB’s powerful features and functions.

You will learn how to design effective and robust data models for DynamoDB.

Pascal Desmarets is the founder and CEO of Hackolade, a data modeling tool for NoSQL databases, storage formats, REST APIs, and JSON in RDBMS. Hackolade pioneered Polyglot Data Modeling, which is data modeling for polyglot data persistence and data exchanges. With Hackolade’s Metadata-as-Code strategy, data models are co-located with application code in Git repositories as they evolve and are published to business-facing data catalogs to ensure a shared understanding of the meaning and context of your data. Pascal is also an advocate of Domain-Driven Data Modeling.

The Align > Refine > Design approach covers conceptual, logical, and physical data modeling (schema design and patterns), combining proven data modeling practices with database-specific features to produce better applications. Learn how to apply this approach when creating an Elasticsearch schema. Align is about agreeing on the common business vocabulary so everyone is aligned on terminology and general initiative scope. Refine is about capturing the business requirements, that is, refining our knowledge of the initiative to focus on what is essential. Design is about the technical requirements, that is, designing to accommodate Elasticsearch’s powerful features and functions.

You will learn how to design effective and robust data models for Elasticsearch.

Rafid is a data modeler who entered the field at the young age of 22, holding an undergraduate degree in Biology and Mathematics from the University of Ottawa. He was inducted into the DMC Hall of Fame by the Data Modeling Institute in July 2020, making him the first Canadian and 10th person worldwide to receive this honor. Rafid possesses extensive experience in creating standardized financial data models and utilizing various modeling techniques to enhance data delivery mechanisms. He is well-versed in data analytics, having conducted in-depth analyses of Capital Markets, Retail Banking, and Insurance data using both relational and NoSQL models. As a speaker, Rafid shared his expertise at the 2021 Data Modeling Zone Europe conference, focusing on the reverse engineering of physical NoSQL data models into logical ones. Rafid and his team recently placed second in an annual AI-Hackathon, focusing on a credit card fraud detection problem. Alongside his professional pursuits, Rafid loves recording music and creating digital art, showcasing his creative mind and passion for innovation in data modeling.

Fully Communication Oriented Information Modeling (FCOIM) is a groundbreaking approach that empowers organizations to communicate with unparalleled precision and elevate their data modeling efforts. FCOIM leverages natural language to facilitate clear, efficient, and accurate communication between stakeholders, ensuring a seamless data modeling process. With the ability to generate artifacts such as JSON, SQL, and DataVault, FCOIM enables data professionals to create robust and integrated data solutions, aligning perfectly with the project’s requirements.

You will learn:

- The fundamentals of FCOIM and its role in enhancing communication within data modeling processes.

- How natural language modeling revolutionizes data-related discussions, fostering collaboration and understanding.

- Practical techniques to generate JSON, SQL, and DataVault artifacts from FCOIM models, streamlining data integration and analysis.

Get ready to be inspired by Marco Wobben, a seasoned software developer with over three decades of experience! Marco’s journey in software development began in the late 80s, and since then, he has crafted an impressive array of applications, ranging from bridge automation, cash flow and decision support tools, to web solutions and everything in between.

As the director of BCP Software, Marco’s expertise shines through in his experience developing off-the-shelf end products, automating data warehouses, and creating user-friendly applications. But that’s not all! Since 2001, he has been the driving force behind CaseTalk, the go-to CASE tool for fact-oriented information modeling.

Join us as we delve into the fascinating world of data and information modeling alongside Marco Wobben. Discover how his passion and innovation have led to the support of Fully Communication Oriented Information Modeling (FCO-IM), a game-changing approach used in institutions worldwide. Prepare to be captivated by his insights and experience as we explore the future of data modeling together!

The data mesh paradigm brings a transformative approach to data management, emphasizing domain-oriented decentralized data ownership, data as a product, self-serve infrastructure, and federated computational governance. AI can play a crucial role in architectural alignment. We’ll walk through modeling a robust data mesh, covering:

- Domain-Centric Model

- Data Product Interfaces

- Schema Evolution and Versioning

- Metadata and Taxonomy

- Development

- Quality and Observability

- Self-Service Infrastructure

- Governance & Compliance

You will learn:

1. Modeling to leverage Data Mesh design’s value in creating accountable, interpretable, and explainable end-user consumption

2. Bridging between Data Fabric and Data Mesh architectures

3. Increasing end-user efficacy of AI and Analytics initiatives with a mesh model

Anshuman Sindhar is a seasoned enterprise data architect, process automation, analytics and AI/ML practitioner with expertise in building and managing data solutions in the domains of finance, risk management, customer management, and regulatory compliance.

During his career spanning 25+ years, Anshuman has been a professional services leader, adept at selling and managing multi-million dollar complex data integration projects from start to finish with major system integrator firms including KPMG, BearingPoint, IBM, Capco, Paradigm Technologies, and Quant16, helping customers achieve their digital transformation objectives in a fast-paced, highly collaborative consulting environment. He currently works as an independent data architect.

The future of enterprise systems lies in their ability to inherently support data exchange and aggregation, crucial for advanced reporting and AI-driven insights. This presentation will explore the development and implementation of a groundbreaking platform designed to achieve these goals.

We aim to demonstrate how our 3D integration approach—encompassing data, time, and systems—facilitates secure data exchange across supply chains and complex conglomerates, such as government entities, which require the coordination of multiple interconnected systems. Our vision leverages AI to automatically analyze existing systems and generate sophisticated 3D systems. These systems, when networked together, enable structured and automated data exchange and support the seamless creation of data warehouses.

Central to our open-source platform is a procedural methodology for system creation. By utilizing core data models and a high-performance primary key structure, our solution ensures that primary keys remain consistent across various systems, irrespective of data transfers.

Join us as we delve into the unique features of our platform, highlighting its capabilities in data integration, AI application, and system migration. Discover how our approach can revolutionize enterprise systems, paving the way for enhanced data sharing and operational efficiency.

Blair Kjenner has been architecting and developing enterprise software for over forty years. Recently, he had the opportunity to reverse engineer many different systems for an organization to help them find missed revenues. The project resulted in recovering millions of uncollected dollars. This inspired Blair to evaluate how systems get created and why we struggle with integration. Blair then formulated a new methodology for developing enterprise systems specifically to deal with the software development industry’s key issue in delivering fully integrated systems to organizations at a reasonable cost. Blair is passionate about contributing to an industry he has enjoyed so much.

Kewal Dhariwal is a dedicated researcher and developer committed to advancing the information technology industry through education, training, and certifications. Kewal has built many standalone and enterprise systems in the UK, Canada, and the United States and understands how we approach enterprise software today and the issues we face. He has worked closely with and presented with the leading thinkers in our industry, including John A. Zachman (Zachman Enterprise Framework), Peter Aiken (CDO, Data Strategy, Data Literacy), Bill Inmon (data warehousing to data lakehouse), and Len Silverston (Universal Data Models). Kewal is committed to advancing our industry by continually looking for new ways to improve our approach to systems development, data management, machine learning, and AI. Kewal was instrumental in creating this book because he immediately realized this approach was different due to his expansive knowledge of the industry and by engaging his broad network of experts to weigh in on the topic and affirm his perspective.

There are two schools of thought when it comes to application development: “We use relational because it allows proper data modeling and is use-case flexible” versus “We use JSON documents because it’s simple and flexible.”

Oracle’s latest database, 23ai, offers a new paradigm: JSON Relational Duality, where data is both JSON and tables and can be accessed with Document APIs and SQL depending on the use case. This session explains the concepts behind a new technology that combines the best of JSON and relational.

You will learn:

- The differences, strengths, and weaknesses of JSON versus relational.

- How a combined model is possible and how it simplifies application design and evolution.

- How SQL and NoSQL are no longer technology choices but just different means to work with the same data in the same system.

Beda Hammerschmidt studied computer science and later earned a PhD in indexing XML data. He joined Oracle as a software developer in 2006. He initiated the support for JSON in Oracle and is co-author of the SQL/JSON standard. Beda is currently managing groups supporting semi-structured data in Oracle (JSON, XML, Full Text, etc).

During your database modeling efforts, do you sometimes find yourself not having the time to do things as well as you’d like? Maybe you say to yourself, “I’ll come back and do that little thing later,” and that later opportunity just doesn’t materialize. Wouldn’t it be nice to have someone to help with the work, but you know that due to budget issues your company cannot hire another person right now? Just maybe AI can be your personal assistant to share some of the work, so you can reach higher levels of quality and confidence with the end result of the data model.

Why Attend? Your session facilitator, Steve Sewell, will demonstrate innovative and practical ways to apply AI in data modeling. These ideas will hopefully inspire you to stretch your imagination and apply AI even further than what is covered in this session. Let’s kick start that imagination by experimenting in a demo with the following applications:

- Defining Attributes: Learn how AI can help define attributes in the context of an entity, ensuring clarity and precision.

- Example Attribute Values: See how AI can create example attribute values to demonstrate how the data will work in practice.

- Documenting Requirements: Discover methods for using AI to document “original” business requirements for existing databases.

- Conceptual Grouping: Understand how AI can group entities conceptually based on provided concept names, facilitating better organization and understanding.

- Nullability Recommendations: Get AI-driven recommendations on having the correct nullabilities for your data fields.

- Datatype Tweaks: Explore how AI can suggest tweaks to datatypes, ensuring optimal performance and compatibility.

Don’t Miss Out! Harness the power of AI to revolutionize your database modeling tasks. Whether you’re looking to improve accuracy, save time, or stay ahead in the ever-evolving tech landscape, this session is your gateway to working smarter.

Steve Sewell graduated from Illinois State University in Business Data Processing, where he gained expertise in various programming languages, requirements gathering, and normalized database design. With a career spanning over three decades in the insurance industry, Steve has excelled in many roles, including his most recent as a Senior Data Designer at State Farm. His current work involves providing strategic guidance for enterprise-wide initiatives involving large-scale Postgres and AWS implementations, while adhering to best practices in database design. Steve is actively involved in teaching new Data Designers data modeling best practices.

Case Studies

The integration of Generative AI into marketing data modeling marks a transformative step in understanding and engaging customers through data-driven strategies. This presentation will explore the definition and capabilities of Generative AI, such as predictive analytics and synthetic data generation. We will highlight specific applications in marketing, including personalized customer interactions, automated content creation, and enhanced real-time decision-making in digital advertising.

Technical discussions will delve into the methodologies for integrating Generative AI with existing marketing systems, emphasizing neural networks and Transformer models. These technologies enable sophisticated behavioral predictions and necessitate robust data infrastructures like cloud computing. Additionally, we will address the ethical implications of Generative AI, focusing on the importance of mitigating biases and maintaining transparency to uphold consumer trust and regulatory compliance.

Looking forward, we will explore future trends in Generative AI that are poised to redefine marketing strategies further, such as AI-driven dynamic pricing and emotional AI for deeper consumer insights. The talk will conclude with strategic recommendations for marketers on how to leverage Generative AI effectively, ensuring they remain at the cutting edge of technological innovation and competitive differentiation.

You will:

- Understand the Role of Generative AI in Marketing Data Modeling in Personalization and Efficiency: Learn how Generative AI can be utilized to analyze consumer data, enhance targeting accuracy, automate content creation, and improve overall marketing efficiency through personalized customer interactions.

- Recognize the Importance of Ethical Practices in AI Implementation of Data Modeling and Execution: Identify the ethical considerations necessary when integrating AI into marketing strategies, including how to address data privacy, avoid algorithmic bias, and maintain transparency to ensure responsible use of AI technologies.

- Anticipate and Adapt to Future AI Trends in Marketing: Acquire insights into emerging AI trends such as dynamic pricing and emotional AI, and develop strategies to incorporate these advancements into marketing practices to stay ahead in a rapidly evolving digital landscape.

Dr. Kyle Allison, renowned as The Doctor of Digital Strategy, brings a wealth of expertise from both the industry and academia in the areas of e-commerce, business strategy, operations, digital analytics, and digital marketing. With a remarkable track record spanning more than two decades, during which he ascended to C-level positions, his professional journey has encompassed pivotal roles at distinguished retail and brand organizations such as Best Buy, Dick’s Sporting Goods, Dickies, and the Army and Air Force Exchange Service.

Throughout his career, Dr. Allison has consistently led the way in developing and implementing innovative strategies in the realms of digital marketing and e-commerce. These strategies are firmly rooted in his unwavering dedication to data-driven insights and a commitment to achieving strategic excellence. His extensive professional background encompasses a wide array of business sectors, including the private, public, and government sectors, where he has expertly guided digital teams in executing these strategies. Dr. Allison’s deep understanding of diverse business models, coupled with his proficiency in navigating B2B, B2C, and DTC digital channels, and his mastery of both the technical and creative aspects of digital marketing, all highlight his comprehensive approach to digital strategy.

In the academic arena, Dr. Allison has been a pivotal figure in shaping the future generation of professionals as a respected professor & mentor, imparting knowledge in fields ranging from digital marketing, analytics, and e-commerce to general marketing and business strategies at prestigious institutions nationwide, spanning both public and private universities and colleges. Beyond teaching, he has played a pivotal role in curriculum development, course creation, and mentorship of doctoral candidates as a DBA doctoral chair.

As an author, Dr. Allison has significantly enriched the literature in business strategy, analytics, digital marketing, and e-commerce through his published works in Quick Study Guides, textbooks, journal articles, and professional trade books. He skillfully combines academic theory with practical field knowledge, with a strong emphasis on real-world applicability and the attainment of educational objectives.

Dr. Allison’s educational background is as extensive as his professional accomplishments, holding a Doctor of Business Administration, an MBA, a Master of Science in Project Management, and a Bachelor’s degree in Communication Studies.

For the latest updates on Dr. Allison’s work and portfolio, please visit DoctorofDigitalStrategy.com.

Do you want to communicate better with consumers and creators of enterprise data? Do you want to effectively communicate within IT application teams about the meaning of data? Do you want to break down data silos and promote data understanding? Conceptual data models are the answer!

Many companies skip over the creation of conceptual data models and go right to logical or physical modeling. They miss reaping the benefits that conceptual models provide:

- Facilitate effective communication about data between everyone who needs to understand basic data domains and definitions.

- Break down data silos to provide enterprise-wide collaboration and consensus.

- Present a high-level, application neutral overview of your data landscape.

- Identify and mitigate data complexities.

You will learn what a conceptual model is, how to create one on the back of a napkin in 10 minutes or less, and how to use that to drive communication at many levels.

Kasi Anderson has been in the data world for close to 25 years serving in multiple roles including data architect, data modeler, data warehouse design and implementation, business intelligence evangelist, data governance specialist, and DBA. She is passionate about bridging the gap between business and IT and working closely with business partners to achieve corporate goals through the effective use of data. She loves to examine data ecosystems and figure out how to extend architectures to meet new requirements and solve challenges. She has worked in many industries including manufacturing and distribution, banking, healthcare, and retail.

In her free time, Kasi loves to read, travel, cook, and spend time with her family. She enjoys hiking the beaches and mountains in the Pacific Northwest and loves to find new restaurants and wineries to enjoy.

Laurel Sturges, a seasoned data professional, has been an integral part of the tech community helping businesses better understand and utilize data for over 40 years. She refers to problem solving as an adventure where she really finds passion in the process of discussing and defining data, getting into all the details including metadata, definitions, business rules, and everything that goes along with it.

Laurel is an expert in creating and delivering quality business data models and increasing communication between key business stakeholders and IT groups. She provides guidance for clients to make informed decisions so her partners can build a quality foundation for success.

She has a diverse background serving in a multitude of roles educating individuals as a peer and as an external advisor. She has served in many industries including manufacturing, aviation, and healthcare. Her specialization is relational data theory and usage of multiple modeling tools.

Outside of the data world, Laurel is learning to garden and loves to can jams and fresh fruits and veggies. Laurel is an active supporter of Special Olympics of Washington and has led her company’s Polar Plunge for Special Olympics team for 10 years, joyfully running into Puget Sound in February!

This presentation is aimed at anyone looking to make their data warehouse initiatives and products more agile and improve collaboration between teams and departments.

The presentation introduces Flight Levels, a lightweight and pragmatic approach to business agility. It helps organizations visualize and manage their work items across different operational levels to ensure effective collaboration and alignment with strategic goals.

The presentation explains the three Flight Levels:

- Strategic Level: Focuses on executing the long-term goals and strategic initiatives to set the direction for the entire organization.

- Coordination Level: Coordinates work between various teams and departments to manage interactions and dependencies.

- Operational Level: Concentrates on daily work and project execution by operational teams.

By visualizing work, improving communication, and continuously optimizing collaboration, Flight Levels provide a hands-on approach to enhancing agility in any organization. Practical examples from Deutsche Telekom will illustrate how Flight Levels are successfully used to increase transparency, efficiency, and value creation.

Kerstin Lerner is a Flight Levels Guide at Deutsche Telekom and an independent agile coach. She has more than 15 years of international experience in a variety of agile roles. Through her coaching and training and as a knowledge catalyst, she helps leaders, organizations, and teams to be more successful in their business.

Creating measurable and lasting business value from data involves much more than just curating a data set or creating a data product, and then feeding it into a BI, ML, or AI tool. This talk will explain 11 core organizational capabilities required to create business value from data and information and explain how companies have successfully leveraged these capabilities in their BI, ML, and AI initiatives. Examples will also be given of companies that have failed in these initiatives by ignoring these foundational principles.

Larry Burns has worked in IT for more than 40 years as a data architect, database developer, DBA, data modeler, application developer, consultant, and teacher. He holds a B.S. in Mathematics from the University of Washington, and a Master’s degree in Software Engineering from Seattle University. He most recently worked for a global Fortune 200 company as a Data and BI Architect and Data Engineer. He contributed material on Database Development and Database Operations Management to the first edition of DAMA International’s Data Management Body of Knowledge (DAMA-DMBOK) and is a former instructor and advisor in the certificate program for Data Resource Management at the University of Washington in Seattle. He has written numerous articles for TDAN.com and DMReview.com and is the author of Building the Agile Database (Technics Publications LLC, 2011), Growing Business Intelligence (Technics Publications LLC, 2016), and Data Model Storytelling (Technics Publications LLC, 2021). His forthcoming book is titled, “A Vision for Value”.

Data modeling is on life support. Some say it’s dead. The traditional practices are increasingly ignored, ridiculed, and forgotten by modern data practitioners. Diehard data modeling practitioners are wandering in the forest. To put it politely, the world of data modeling is in an existential crisis.

At the same time, data modeling is more critical than ever. With AI’s rising popularity, many organizations rush to incorporate it into their infrastructure. Without consideration of the underlying data framework, the result will be unpleasant for many organizations.

In this talk, Joe argues that data modeling is a key enabler for success with AI and other data initiatives. For data modeling to survive, we must return to basics and revamp data modeling to work with modern business workflows and technologies. Long live data modeling!

Joe Reis is a “recovering data scientist” and co-author of the best-selling O’Reilly book, Fundamentals of Data Engineering. He’s a business-minded data nerd who’s worked in the data industry for 20 years, with responsibilities ranging from statistical modeling, forecasting, machine learning, data engineering, data architecture, and almost everything else.

Joe hosts the popular data podcasts The Monday Morning Data Chat and The Joe Reis Show, keynotes major data conferences worldwide, and advises and invests in next-generation data product companies.

Data is a cornerstone of contemporary organisational success, a notion exemplified by financial entities such as Nedbank, which navigate both market and regulatory landscapes. This talk will chart Nedbank’s voyage in data modelling, centering on a significant data migration initiative. The narrative will be framed by the PPP paradigm: People, Process, and Technology. We will share the human element challenges in team development and retention, our internal and external process collaborations, and the pivotal role these play in our modelling endeavours. The technology segment will cover our adaptation and utilisation of the IBM banking and financial markets data model, detailing the implementation, customisation, and the technological infrastructure supporting our data and processes. Insights into our successes, learnings, and areas for enhancement will be shared, culminating in a contemplation of our project’s triumphs and future prospects.

Thembeka Snethemba Jiyane is a distinguished data modeler at Nedbank Group, renowned for her expertise in data analysis, warehousing, and business intelligence. With a robust background in SQL and data modeling tools, she excels in delivering top-tier data solutions. Her career includes pivotal roles at the South African Reserve Bank, BCX, and Telkom, which have all contributed to her profound knowledge and experience. Thembeka holds a BSc in Computer Science and Mathematics and an Honours BSc in IT, reflecting her commitment to continuous learning. Her analytical prowess and problem-solving skills are matched by a passion for leveraging data to drive organizational transformation, making her an invaluable contributor to any enterprise.

How can we use the niche technologies and power of LLMs and Gen AI to automate data modeling? Can we feed business requirements with correct prompt engineering to enable architects to use LLMs to create domain, logical, and physical models? Can it help recommend entities, attributes, and their definitions? How can Gen AI be used to integrate company-specific guidelines into these models? Can it flag potential attributes that may contain PII data? Can this further extend into testing the performance of query patterns on these tables and suggesting improvements before the models are handed over to the development team? Please join us to see how we can unlock the potential of LLMs and Gen AI as data architects.

Engineering Director

Senior Engineering Manager

Semantics

In this session, we will explore advanced structures within knowledge graphs that go beyond traditional ontologies and taxonomies, offering deeper insights and novel applications for data modeling. As knowledge graphs gain prominence in various domains, their potential for representing complex relationships and dynamics becomes increasingly valuable. This presentation will delve into five special graph structures that extend the capabilities of standard knowledge graph frameworks:

- Trophic Cascades: Understanding ecological hierarchies and interactions, and how these concepts can be applied to model dependencies and influence within organizational data.

- Non-deterministic Finite-State Automata (NFA): Leveraging NFAs to model complex decision processes and workflows that capture the probabilistic nature of real-world operations.

- Performance Management Strategy Maps: Using strategy maps to visualize and align organizational objectives, facilitating better performance management through strategic relationships and causal links.

- Bayesian Belief Networks, Causal Diagrams, and Markov Blankets: Implementing Bayesian networks and causal diagrams to model probabilistic relationships and infer causality within data. Utilizing Markov blankets to isolate relevant variables for a particular node in a probabilistic graphical model, enabling efficient inference and robust decision-making by focusing on the local dependencies.

- Workflows: Structuring workflows within knowledge graphs to represent and optimize business processes, enabling more efficient and adaptive operations.

By examining these structures, attendees will gain insights into how knowledge graphs can be utilized to model and manage intricate systems, drive innovation in data modeling practices, and support advanced business intelligence frameworks. This session is particularly relevant for data modelers, BI professionals, and knowledge management experts who are interested in pushing the boundaries of traditional knowledge graphs.

Eugene Asahara, with a rich history of over 40 years in software development, including 25 years focused on business intelligence, particularly SQL Server Analysis Services (SSAS), is currently working as a Principal Solutions Architect at Kyvos Insights. His exploration of knowledge graphs began in 2005 when he developed Soft-Coded Logic (SCL), a .NET Prolog interpreter designed to modernize Prolog for a data-distributed world. Later in 2012, Eugene ventured into creating Map Rock, a project aimed at constructing knowledge graphs that merge human and machine intelligence across numerous SSAS cubes. While these initiatives didn’t gain extensive adoption at the time, the lessons learned have proven invaluable. With the emergence of Large Language Models (LLMs), building and maintaining knowledge graphs has become practically achievable, and Eugene is leveraging his past experience and insights from SCL and Map Rock to this end. He resides in Eagle, Idaho, with his wife, Laurie, a celebrated watercolorist known for her award-winning work in the state, and their two cats, Venus and Bodhi.

Knowledge Graphs (KGs) are all around us and we use them every day. Many of the emerging data management products, such as data catalogs, data fabric, and MDM products, use knowledge graphs as their engines.

A knowledge graph is not a one-off engineering project. Building a KG requires collaboration between functional domain experts, data engineers, data modelers, and key sponsors. It also combines technology, strategy, and organizational aspects (focusing only on technology leads to a high risk of failure).

KGs are effective tools for capturing and structuring a large amount of structured, unstructured, and semi-structured data. As such, KGs are becoming the backbone of many systems, including semantic search engines, recommendation systems, conversational bots, and data fabric.

This session guides data and analytics professionals in demonstrating the value of knowledge graphs and in building semantic applications.

Sumit Pal is a former Gartner VP Analyst in the Data Management & Analytics space. He has more than 30 years of experience in the data and software industry, in roles spanning startups to enterprise organizations, building, managing, and guiding teams and building scalable software systems across the stack, from the middle tier and data layer to analytics and UI, using Big Data, NoSQL, DB internals, data warehousing, data modeling, and data science. He is also the published author of a book on SQL engines and developed a MOOC on Big Data.

In the ever-evolving landscape of data management, capturing and leveraging business knowledge is paramount. Traditional data modelers have long excelled at designing logical models that meticulously structure data to reflect the intricacies of business operations. However, as businesses grow more interconnected and data-driven, there is a compelling need to transcend these traditional boundaries.

Join us for an illuminating session where we explore the intersection of logical data modeling and semantic data modeling, unveiling how these approaches can synergize to enhance the understanding and utilization of business knowledge. This talk is tailored for data modelers who seek to expand their expertise and harness the power of semantic technologies.

We’ll delve into:

- The foundational principles of logical data modeling, emphasizing how it captures and stores essential business data.

- The transformative role of semantic data modeling in building knowledge models that contextualize and interrelate business concepts.

- Practical insights on integrating logical data models with semantic frameworks to create a comprehensive and dynamic knowledge ecosystem.

- Real-world examples showcasing the benefits of semantic modeling in enhancing data interoperability, enriching business intelligence, and enabling advanced analytics.

Prepare to embark on a journey that not only reinforces the core strengths of your data modeling skills but also opens new avenues for applying semantic methodologies to capture deeper, more meaningful business insights. This session promises to be both informative and inspiring, equipping you with the knowledge to bridge the gap between traditional data structures and the next generation of business knowledge modeling.

Don’t miss this opportunity to stay ahead in the data modeling domain and transform the way you capture and utilize business knowledge.

Jeffrey Giles is the Principal Architect at Sandhill Consultants, with over 18 years of experience in information technology. A recognized professional in Data Management, Jeffrey has shared his knowledge as a guest lecturer on Enterprise Architecture at the Boston University School of Management.

Jeffrey’s experience in information management includes customizing Enterprise Architecture frameworks and developing model-driven solution architectures for business intelligence projects. His skills encompass analyzing business process workflows and data modeling at the conceptual, logical, and physical levels, as well as UML application modeling.

With an understanding of both transactional and data warehousing systems, Jeffrey focuses on aligning business, data, applications, and technology. He has contributed to designing Data Governance standards, data glossaries, and taxonomies. Jeffrey is a certified DCAM assessor and trainer, as well as a DAMA certified data management practitioner. He has also written articles for the TDAN newsletter. Jeffrey’s practical insights and approachable demeanor make him a valuable speaker on data management topics.

The Align > Refine > Design approach covers conceptual, logical, and physical data modeling (schema design and patterns), combining proven data modeling practices with database-specific features to produce better applications. Learn how to apply this approach when creating a Neo4j schema. Align is about agreeing on the common business vocabulary so everyone is aligned on terminology and general initiative scope. Refine is about capturing the business requirements, that is, refining our knowledge of the initiative to focus on what is essential. Design is about the technical requirements, that is, designing to accommodate Neo4j’s powerful features and functions.

You will learn how to design effective and robust data models for Neo4j.

David Fauth is a Senior Field Engineer at Neo4j. He enjoys helping Neo4j users become successful through proper modeling of their data. He has expertise in Neo4j scalability, system architecture, geospatial, streaming data and security and is currently focusing on solving large data volume challenges using Neo4j.

Data modelers, fear not: your knowledge, skills, and expertise are also applicable to ontologies! Learn a four-step process to translate your data model into an ontology. Note: this presentation includes no tool usage. You will learn:

- How to translate your data model into an ontology

- The planning steps and investigations before you begin, followed by a four-step translation process

Norman Daoust founded his consulting company Daoust Associates in 2001. He became addicted to modeling as a result of his numerous healthcare data integration projects. He was a long-time contributor to the healthcare industry standard data model Health Level Seven Reference Information Model (RIM). He sees patterns in both data model entities and their relationships. Norman enjoys training and making complex ideas easy to understand.

Keynotes

Wall Street Journal bestselling and award-winning author Isabella Maldonado wore a gun and badge in real life before turning to crime writing. A graduate of the FBI National Academy in Quantico and the first Latina to attain the rank of captain in her police department, she retired as the Commander of Special Investigations and Forensics. During more than two decades on the force, her assignments included hostage negotiator, department spokesperson, and precinct commander. She uses her law enforcement background to bring a realistic edge to her writing, which includes the Special Agent Nina Guerrera series (being developed by Netflix for a feature film starring Jennifer Lopez), the Special Agent Daniela Vega series, and the Detective Veranda Cruz series. She also co-authors the Sanchez and Heron series with Jeffery Deaver. Her books are published in 24 languages and sold in 52 countries. For more information, visit www.isabellamaldonado.com.

Grounding data governance in organizational value is the easiest way to assure continued attention from and resources for your program. This session presents a number of actual case studies in data governance applied. Dollar amounts are featured to help professionals use these as models upon which to base their own efforts.

Peter Aiken is acknowledged to be a top data management (DM) authority. As a practicing data manager, consultant, author, and researcher, he has been actively performing and studying DM for more than thirty years. His expertise has been sought by some of the world’s most important organizations, and his achievements have been recognized internationally. He has held leadership positions and consulted with more than 150 organizations in 27 countries across numerous industries, including intelligence, defense, banking, healthcare, telecommunications, and manufacturing. He is a sought-after keynote speaker and author of multiple publications, including his popular The Case for the Chief Data Officer and Monetizing Data Management, and he hosts the longest running and most successful webinar dedicated to data management. Peter is the Founding Director of Data Blueprint, a consulting firm that helps organizations leverage data for competitive advantage and operational efficiencies. He is also an Associate Professor of Information Systems at Virginia Commonwealth University (VCU), past President of the International Data Management Association (DAMA-I), and Associate Director of the MIT International Society of Chief Data Officers.

Sponsor DMZ US 2025

If you are interested in sponsoring DMZ US 2025, in Phoenix, Arizona, March 4-6, please complete this form and we will send you the sponsorship package.

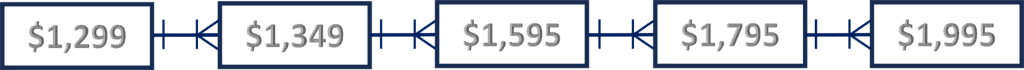

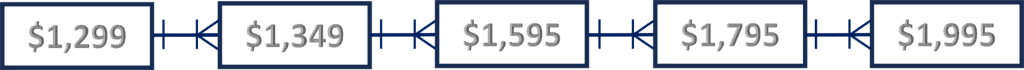

Lock in the lowest prices today - Prices increase as tickets sell

Although there are no refunds, substitutions can be made without cost. For every three people who register from the same organization, a fourth person attends free!

Why our current prices are so low

We keep costs low without sacrificing quality, and pass these savings on through lower registration prices. As in the airline industry, however, prices rise as seats fill, so the least expensive ticket price is available today! If you would like to register your entire team and combine DMZ with an in-person team-building event, complete this form and we will contact you within 24 hours with discounted prices. If you are a student or work full-time for a not-for-profit organization, please complete this form and we will contact you within 24 hours with discounted prices.

DMZ sponsors

Platinum

Gold

Silver

Location and hotels

Desert Ridge is just a 20-minute ride from the Phoenix Sky Harbor International Airport. There are many amazing hotels nearby. The two below are next to each other, include a complimentary buffet breakfast, and share the same free shuttle to the conference site, which is two miles away. We are holding a small block of rooms at each hotel, and these discounted rates are half the cost of nearby hotels, so book early!

The Sleep Inn

At the discounted rate of $163/night, the Sleep Inn is located at 16630 N. Scottsdale Road, Scottsdale, Arizona 85254. You can call the hotel directly at (480) 998-9211 and ask for the Data Modeling Zone (DMZ) rate, or book directly online by clicking here.

The Hampton Inn

At the discounted rate of $215/night, the Hampton Inn is located at 16620 North Scottsdale Road, Scottsdale, Arizona 85254. You can call the hotel directly at (480) 348-9280 and ask for the Data Modeling Zone (DMZ) rate, or book directly online by clicking here.